I bought the excellent book by Tariq Rashid in its German edition, «Neuronale Netze selbst programmieren» (the English original is titled «Make Your Own Neural Network»). The sample code in the book is written in Python, which also runs on macOS. The standard language on macOS, however, is Swift, so I wrote an implementation in Swift. I assume that you have read the book, in either English or German. The code corresponds to the final version with multiple epochs (five), and in this post I explain my Swift version.

I created a new project in Xcode 10.1 and chose the «Command Line Tool» application template. All of the code is in the file «main.swift». You can download it from https://github.com/michabuehlmann/Neural-Network; the file to download is Neural Network 2.zip. You also need the MNIST data set: copy the files mnist_test.csv and mnist_train.csv to your Downloads directory (important!), because the program looks for them there. Then you can run the program. It should work the same way as the original Python program. I got a performance of 0.963.

The class Matrix

I wrote a class called Matrix. It implements the functions that Python gets from the numpy library and serves as a stand-in for mathematical matrices. The data is stored in the property «data», with further properties for the number of rows and columns:

class Matrix: CustomStringConvertible {

internal var data: [[Double]]

var rows: Int

var columns: Int

There are several init functions for the matrix class:

init(_ data:[[Double]]) {

self.data = data

self.rows = data.count

self.columns = data.first?.count ?? 0

}

init(_ data:[[Double]], rows:Int, columns:Int) {

self.data = data

self.rows = rows

self.columns = columns

}

init(rows:Int, columns:Int) {

self.data = [[Double]](repeating: [Double](repeating: 0, count: columns), count: rows)

self.rows = rows

self.columns = columns

}

You can use these initializers as in the following examples:

let wih = Matrix([[0.9, 0.3, 0.4], [0.2, 0.8, 0.2], [0.1, 0.5, 0.6]])

let who = Matrix([[0.3, 0.7, 0.5], [0.6, 0.5, 0.2], [0.8, 0.1, 0.9]])

let x = Matrix([[10.0, 9.0, 8.0], [3.0, 2.0, 1.0]], rows: 2, columns: 3)

let y = Matrix([[1, 2, 3], [4, 5, 6]], rows: 2, columns: 3)

let z = Matrix([[1, 2], [3, 4], [5, 6]], rows: 3, columns: 2)

let xx = Matrix([[1,2], [3,4]], rows: 2, columns: 2)

let yy = Matrix([[5,6], [7,8]], rows: 2, columns: 2)

The next function gives access to individual elements with subscript syntax:

subscript(row: Int, column: Int) -> Double {

get {

return data[row][column]

}

set {

data[row][column] = newValue

}

}

This lets us read or write the element of a matrix called wih at the indices row and col:

wih[row,col]

The next properties return the dimensions, the number of rows, the number of columns and the number of elements of the matrix.

var dimensions: (rows: Int, columns: Int) {

get {

return (data.count, data.first?.count ?? 0)

}

}

var rowCount: Int {

get {

return data.count

}

}

var columnCount: Int {

get {

return data.first?.count ?? 0

}

}

var count: Int {

get {

return rows * columns

}

}

The next property makes it possible to print a matrix.

var description: String {

var dsc = ""

for row in 0..<rows {

for col in 0..<columns {

let d = data[row][col]

dsc += String(d) + " "

}

dsc += "\n"

}

dsc += "rows: \(rows) columns: \(columns)\n"

return dsc

}

You use this function like this:

print(z)

The output of this is the following:

1.0 2.0

3.0 4.0

5.0 6.0

rows: 3 columns: 2

I also wrote a function to draw a matrix (zeichne is German for «draw»). It needs a graphics context to draw into, which takes a little more programming, so I just show the function without that additional code.

func zeichne() {

let context = NSGraphicsContext.current?.cgContext;

//let shape = "square"

context!.setLineWidth(1.0)

for col in 0..<self.columns {

for row in 0..<self.rows {

let color = CGFloat(1.0/(7.0-self[row,col]));

//print(color)

context!.setStrokeColor(red: color, green: color, blue: color, alpha: 1)

context!.setFillColor(red: color, green: color, blue: color, alpha: 1)

let rectangle = CGRect(x: col*10, y: row*10, width: 10, height: 10)

context!.addRect(rectangle)

context!.drawPath(using: .fillStroke)

}

}

}

The next function returns the index of the biggest value in a vector.

func maximum() -> Int {

var data = 0.0

var index = 0

for row in 0..<self.rows {

if self[row,0] > data {

data = self[row,0]

index = row

}

}

return index

}
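Note that maximum() starts its search from 0.0, which is fine here because sigmoid outputs are always positive. As a minimal sketch of a variant that also copes with negative values, the following stand-alone function works on a plain array; the name indexOfMaximum is hypothetical and not part of the original class.

```swift
// Hypothetical helper, not part of the original Matrix class.
// Returns the index of the largest value, or nil for an empty array.
func indexOfMaximum(_ values: [Double]) -> Int? {
    // Start from the first element instead of 0.0, so negative inputs work too.
    guard var best = values.first else { return nil }
    var bestIndex = 0
    for (index, value) in values.enumerated() where value > best {
        best = value
        bestIndex = index
    }
    return bestIndex
}

if let index = indexOfMaximum([0.02, 0.91, 0.13]) {
    print(index)   // 1
}
```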

Now comes a number of functions for calculating with matrices. They are programmed as operators, so you can use them as in mathematics. First there is the plus operator, which adds two matrices together.

static func +(left: Matrix, right: Matrix) -> Matrix {

assert(left.dimensions == right.dimensions, "Cannot add matrices of different dimensions")

let m = Matrix(left.data, rows: left.rows, columns: left.columns)

for row in 0..<left.rows {

for col in 0..<left.columns {

m[row,col] += right[row,col]

}

}

return m

}

The use of this operator is this:

let a = x+y

print(a)

The output is:

11.0 11.0 11.0

7.0 7.0 7.0

rows: 2 columns: 3

Next there is the += operator, which adds the right matrix to the left one.

static func +=(left: Matrix, right: Matrix) {

assert(left.dimensions == right.dimensions, "Cannot add matrices of different dimensions")

for row in 0..<left.rows {

for col in 0..<left.columns {

left[row,col] += right[row,col]

}

}

}

Now the equivalent operator minus (-). It subtracts the right matrix from the left one.

static func -(left: Matrix, right: Matrix) -> Matrix {

assert(left.dimensions == right.dimensions, "Cannot subtract matrices of different dimensions")

let m = Matrix(left.data, rows: left.rows, columns: left.columns)

for row in 0..<left.rows {

for col in 0..<left.columns {

m[row,col] -= right[row,col]

}

}

return m

}

Next there is a function that subtracts each element of the matrix right from the double value left.

static func -(left: Double, right: Matrix) -> Matrix {

let m = Matrix(rows: right.rows, columns: right.columns)

for row in 0..<right.rows {

for col in 0..<right.columns {

m[row,col] = left - right[row,col]

}

}

return m

}

The next operator multiplies each value of the left matrix by the corresponding value of the right matrix. This element-wise product is not the ordinary matrix multiplication (also called dot product), which follows further down.

static func *(left: Matrix, right: Matrix) -> Matrix {

assert(left.dimensions == right.dimensions, "Cannot multiply matrices of different dimensions element-wise")

let m = Matrix(left.data, rows: left.rows, columns: left.columns)

for row in 0..<left.rows {

for col in 0..<left.columns {

m[row,col] *= right[row,col]

}

}

return m

}

Like the minus operator above that takes a double value, the next operator multiplies a double value called left by every element of the matrix called right.

static func *(left: Double, right: Matrix) -> Matrix {

let m = Matrix(right.data, rows: right.rows, columns: right.columns)

for row in 0..<right.rows {

for col in 0..<right.columns {

m[row,col] *= left

}

}

return m

}

Now follows the comparison operator ==. It returns true if the two matrices have the same dimensions and the same elements. Otherwise it returns false.

static func ==(left: Matrix, right: Matrix) -> Bool {

if left.rows != right.rows {

return false

}

if left.columns != right.columns {

return false

}

for i in 0..<left.rows {

for j in 0..<left.columns {

if left[i,j] != right[i,j] {

return false

}

}

}

return true

}

Next comes the transpose of a matrix. The postfix operator ^ returns the transpose of the matrix m.

static postfix func ^(m: Matrix) -> Matrix {

let t = Matrix(rows:m.columns, columns:m.rows)

for row in 0..<m.rows {

for col in 0..<m.columns {

t[col,row] = m[row,col]

}

}

return t

}

The use of this operator is the following:

let b = x^

print(b)

The output:

10.0 3.0

9.0 2.0

8.0 1.0

rows: 3 columns: 2
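Swift's standard library has no postfix ^ operator, so it has to be declared at file scope before it can be implemented. The listing above does not show that declaration, so the following is only a sketch of how it could look, using a plain nested array instead of the Matrix class to stay self-contained.

```swift
// A postfix ^ does not exist in the standard library, so it is declared here.
postfix operator ^

// Transpose for a plain nested array; assumes all rows have the same length.
postfix func ^ (m: [[Double]]) -> [[Double]] {
    let columns = m.first?.count ?? 0
    var t = [[Double]](repeating: [Double](repeating: 0, count: m.count), count: columns)
    for row in 0..<m.count {
        for col in 0..<columns {
            t[col][row] = m[row][col]
        }
    }
    return t
}

let x: [[Double]] = [[10, 9, 8], [3, 2, 1]]
let b = x^
print(b)   // [[10.0, 3.0], [9.0, 2.0], [8.0, 1.0]]
```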

The Matrix class ends with the implementation of the matrix multiplication (or dot product). The operator we use for it is **.

static func **(left: Matrix, right: Matrix) -> Matrix {

assert(left.columns == right.rows, "Two matrices can only be matrix multiplied if one has dimensions m x n and the other has dimensions n x p")

let C = Matrix(rows: left.rows, columns: right.columns)

for i in 0..<left.rows {

for j in 0..<right.columns {

for k in 0..<right.rows {

C[i, j] += left[i, k] * right[k, j]

}

}

}

return C

}

You use the operator ** like this:

let c = z**y

print(c)

The output is:

9.0 12.0 15.0

19.0 26.0 33.0

29.0 40.0 51.0

rows: 3 columns: 3
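To make the difference between the element-wise operator * and the matrix product ** concrete, here is a small sketch on plain nested arrays with the values of xx and yy from the examples above. The ** operator is not built into Swift and must be declared; the precedence group chosen here is my assumption, and the function name elementwise is hypothetical.

```swift
// Assumption: the precedence group is a choice made for this sketch.
infix operator **: MultiplicationPrecedence

// Element-wise product: both operands must have the same shape.
func elementwise(_ a: [[Double]], _ b: [[Double]]) -> [[Double]] {
    precondition(a.count == b.count, "row counts differ")
    var result = a
    for r in 0..<a.count {
        precondition(a[r].count == b[r].count, "column counts differ")
        for c in 0..<a[r].count {
            result[r][c] = a[r][c] * b[r][c]
        }
    }
    return result
}

// Matrix product: columns of the left operand must equal rows of the right one.
func ** (left: [[Double]], right: [[Double]]) -> [[Double]] {
    let inner = right.count
    precondition(left.allSatisfy { $0.count == inner }, "left columns must equal right rows")
    let columns = right.first?.count ?? 0
    var result = [[Double]](repeating: [Double](repeating: 0, count: columns), count: left.count)
    for i in 0..<left.count {
        for j in 0..<columns {
            for k in 0..<inner {
                result[i][j] += left[i][k] * right[k][j]
            }
        }
    }
    return result
}

let xx: [[Double]] = [[1, 2], [3, 4]]
let yy: [[Double]] = [[5, 6], [7, 8]]
print(elementwise(xx, yy))   // [[5.0, 12.0], [21.0, 32.0]]
print(xx ** yy)              // [[19.0, 22.0], [43.0, 50.0]]
```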

The class NeuralNetwork

Here comes the class NeuralNetwork with the following properties:

class NeuralNetwork {

internal var inodes: Int

internal var hnodes: Int

internal var onodes: Int

internal var lr: Double

internal var wih: Matrix

internal var who: Matrix

internal var r: UInt64 = 0

As always, there is an init function:

init(inputnodes: Int, hidddennodes: Int, outputnodes: Int, learningrate: Double) {

self.inodes = inputnodes

self.hnodes = hidddennodes

self.onodes = outputnodes

self.lr = learningrate

self.wih = Matrix(rows: self.hnodes, columns: self.inodes)

self.who = Matrix(rows: self.onodes, columns: self.hnodes)

for row in 0..<wih.rows {

for col in 0..<wih.columns {

arc4random_buf(&self.r, 8)

wih[row,col] = (Double(self.r) / Double(UInt64.max)) - 0.5

}

}

for row in 0..<who.rows {

for col in 0..<who.columns {

arc4random_buf(&self.r, 8)

who[row,col] = (Double(self.r) / Double(UInt64.max)) - 0.5

}

}

}

The init function creates the two weight matrices wih and who and then fills them with random values between -0.5 and 0.5, as described in the book.
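From Swift 4.2 on, the same range of random values can also be produced with Double.random(in:). The following is a minimal sketch of that alternative, not the code used in this project; the helper name randomWeights is hypothetical and it returns a plain nested array rather than a Matrix.

```swift
// Hypothetical helper: builds a rows x columns table with values from -0.5 to 0.5.
func randomWeights(rows: Int, columns: Int) -> [[Double]] {
    return (0..<rows).map { _ in
        (0..<columns).map { _ in Double.random(in: -0.5...0.5) }
    }
}

let weights = randomWeights(rows: 3, columns: 4)
print(weights.count, weights[0].count)   // 3 4
```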

Because we need the sigmoid function several times, we define it here.

func sigmoid(_ x: Double) -> Double {

return 1.0 / (1.0 + exp(-x))

}

The next routine is the activation function, which applies the sigmoid function to each element of the column vector list (only column 0 is processed):

func activation_function(_ list: Matrix) -> Matrix {

let result = Matrix(rows: list.rows, columns: list.columns)

for i in 0..<list.rows {

result[i,0] = sigmoid(list[i,0])

}

return result

}
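Since activation_function only touches column 0, it relies on its argument being a column vector. As a sketch of a more general variant, assuming a plain nested array instead of the Matrix class, the sigmoid can be mapped over every element; the name activate is hypothetical.

```swift
import Foundation   // provides exp

func sigmoid(_ x: Double) -> Double {
    return 1.0 / (1.0 + exp(-x))
}

// Hypothetical variant: applies the sigmoid to every element, not just column 0.
func activate(_ values: [[Double]]) -> [[Double]] {
    return values.map { row in row.map(sigmoid) }
}

print(activate([[0.0]]))   // [[0.5]]
```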

Now comes the method query. It first transposes the row vector input_list into a column vector. Then we multiply the matrix wih by the inputs; the result is called hidden_inputs. These are passed through the activation_function to give the hidden_outputs. The same is done for the output layer: who is multiplied by the hidden_outputs to give the final_inputs, and the activation_function is applied to them. The result is the final_outputs, which the query method returns.

func query(_ input_list: Matrix) -> Matrix {

let inputs = input_list^

let hidden_inputs = wih**inputs

let hidden_outputs = activation_function(hidden_inputs)

let final_inputs = who**hidden_outputs

let final_outputs = activation_function(final_inputs)

return final_outputs

}
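The forward pass can be traced with a small worked example. The sketch below uses the 3x3 values of wih and who from the Matrix examples further up and plain arrays instead of the Matrix class; the input vector [0.9, 0.1, 0.8] and the helper name layer are assumptions made for illustration.

```swift
import Foundation   // provides exp

func sigmoid(_ x: Double) -> Double {
    return 1.0 / (1.0 + exp(-x))
}

// One layer of the network: matrix-vector product followed by the sigmoid.
func layer(_ weights: [[Double]], _ input: [Double]) -> [Double] {
    return weights.map { row in
        sigmoid(zip(row, input).reduce(0.0) { $0 + $1.0 * $1.1 })
    }
}

let wih: [[Double]] = [[0.9, 0.3, 0.4], [0.2, 0.8, 0.2], [0.1, 0.5, 0.6]]
let who: [[Double]] = [[0.3, 0.7, 0.5], [0.6, 0.5, 0.2], [0.8, 0.1, 0.9]]
let input = [0.9, 0.1, 0.8]   // assumed example input

let hiddenOutputs = layer(wih, input)          // sigmoid of [1.16, 0.42, 0.62]
let finalOutputs = layer(who, hiddenOutputs)
print(finalOutputs)   // roughly [0.726, 0.709, 0.778]
```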

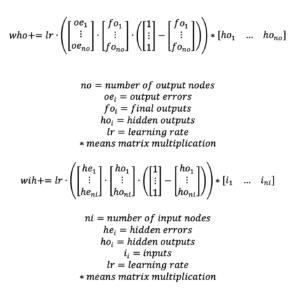

Next follows the train method. It is described in detail in the book, so here is just a brief summary. First we take the transposes of input_list and target_list. The hidden_inputs are the matrix product of wih and the inputs, and the hidden_outputs are the activated hidden_inputs. The final inputs and outputs are calculated the same way from who and the hidden_outputs. The output errors are the difference between the targets and the final outputs, and the hidden errors are the output errors propagated back through the transpose of who. Then comes the weight update.

You can see the process in Swift in the following code. It uses the operators we programmed in the Matrix class.

func train(input_list: Matrix, target_list: Matrix) {

let inputs = input_list^

let targets = target_list^

let hidden_inputs = wih**inputs

let hidden_outputs = activation_function(hidden_inputs)

let final_inputs = who**hidden_outputs

let final_outputs = activation_function(final_inputs)

let output_errors = targets - final_outputs

let hidden_errors = (who^)**output_errors

self.who += self.lr * ((output_errors * final_outputs * (1.0 - final_outputs)) ** (hidden_outputs^))

self.wih += self.lr * ((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)) ** (inputs^))

}
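Written in matrix notation, the last four lines of train correspond to the formulas below. This is only a restatement of the code above; the symbols are my own shorthand: $\alpha$ is lr, $T$ the targets, $I$ the inputs, $O_h$ and $O_o$ the hidden and final outputs, $E_o$ and $E_h$ the output and hidden errors, and $\odot$ the element-wise product.

```latex
\begin{aligned}
E_o &= T - O_o \\
E_h &= W_{ho}^{\top} E_o \\
W_{ho} &\leftarrow W_{ho} + \alpha \,\bigl(E_o \odot O_o \odot (1 - O_o)\bigr)\, O_h^{\top} \\
W_{ih} &\leftarrow W_{ih} + \alpha \,\bigl(E_h \odot O_h \odot (1 - O_h)\bigr)\, I^{\top}
\end{aligned}
```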

The main part of the program

Now we program the main function.

First we declare some constants (in Swift we use let):

let input_nodes = 784

let hidden_nodes = 100

let output_nodes = 10

let learning_rate = 0.2

Then we declare an instance of the class NeuralNetwork, called n

let n = NeuralNetwork(inputnodes: input_nodes, hidddennodes: hidden_nodes, outputnodes: output_nodes, learningrate: learning_rate)

Next we locate the MNIST training data CSV file with 60,000 records. It has to be in the Downloads directory!

let training_data_file = "mnist_train"

let DocumentDirURL1 = try! FileManager.default.url(for: .downloadsDirectory, in: .userDomainMask, appropriateFor: nil, create: false)

let fileURL1 = DocumentDirURL1.appendingPathComponent(training_data_file).appendingPathExtension("csv")

Then we load the contents of the file into a string.

var training_data_list = ""

do {

training_data_list = try String(contentsOf: fileURL1)

} catch let error as NSError {

print(error)

}

Now we split the data into lines at the newline character «\n».

var lines = training_data_list.split() { $0 == "\n" }.map { $0 }

Afterwards we declare two matrices and the number of epochs (here 5).

var inputs = Matrix(rows: 1, columns: input_nodes)

var targets = Matrix(rows: 1, columns: output_nodes)

let epochs = 5

In every epoch we go through all records in the training data set and split each record at the commas («,»).

for j in 0..<epochs {

for line in 0..<lines.count {

var all_values = lines[line].split() { $0 == "," }.map { Int($0)! }

Every 100 records we print the epoch index, a « - » and the line number.

if (line % 100 == 0) {

print(j, " - ", line)

}

Scale and shift the inputs:

for i in 0..<input_nodes {

inputs[0,i] = (Double(all_values[i+1]) / 255.0 * 0.99) + 0.01

}

Create the target output values (all 0.01, except the desired label which is 0.99).

for i in 0..<output_nodes {

targets[0,i] = 0.01

}

targets[0,all_values[0]] = 0.99

Last but not least, we train the neural network by calling the train method of the neural network n.

n.train(input_list: inputs, target_list: targets)

Here again the whole training loop:

for j in 0..<epochs {

for line in 0..<lines.count {

var all_values = lines[line].split() { $0 == "," }.map { Int($0)! }

if (line % 100 == 0) {

print(j, " - ", line)

}

for i in 0..<input_nodes {

inputs[0,i] = (Double(all_values[i+1]) / 255.0 * 0.99) + 0.01

}

//print(inputs)

for i in 0..<output_nodes {

targets[0,i] = 0.01

}

targets[0,all_values[0]] = 0.99

//print(targets)

n.train(input_list: inputs, target_list: targets)

}

}
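The loop above force-unwraps every field with Int($0)!, so a single malformed line stops the program. As a minimal sketch of a more forgiving approach, the per-record work (splitting, scaling the inputs, building the targets) could be moved into a helper that returns nil for bad records. The function name parseRecord and its parameters are hypothetical, and it returns plain arrays rather than Matrix objects.

```swift
// Hypothetical helper: turns one CSV record into a label, scaled inputs and targets.
// Returns nil instead of crashing when a field is not an integer,
// the record has the wrong length, or the label is out of range.
func parseRecord(_ line: String, inputCount: Int, outputCount: Int)
    -> (label: Int, inputs: [Double], targets: [Double])? {
    let parts = line.split(separator: ",")
    let values = parts.compactMap { Int($0) }
    guard values.count == parts.count, values.count == inputCount + 1 else { return nil }

    let label = values[0]
    guard (0..<outputCount).contains(label) else { return nil }

    // Scale the pixel values from 0...255 to 0.01...1.0, as in the loop above.
    let inputs = values.dropFirst().map { Double($0) / 255.0 * 0.99 + 0.01 }

    // All targets are 0.01, except the desired label, which is 0.99.
    var targets = [Double](repeating: 0.01, count: outputCount)
    targets[label] = 0.99
    return (label: label, inputs: inputs, targets: targets)
}

if let record = parseRecord("3,0,255", inputCount: 2, outputCount: 10) {
    print(record.label, record.inputs, record.targets)
}
```

A caller would skip a record when the helper returns nil rather than aborting the whole training run.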

Next we locate the MNIST test data CSV file with 10,000 records. Again: it has to be in the Downloads directory!

let test_data_file = "mnist_test"

let DocumentDirURL2 = try! FileManager.default.url(for: .downloadsDirectory, in: .userDomainMask, appropriateFor: nil, create: false)

let fileURL2 = DocumentDirURL2.appendingPathComponent(test_data_file).appendingPathExtension("csv")

Then we load the contents of the file into a string.

var test_data_list = ""

do {

test_data_list = try String(contentsOf: fileURL2)

} catch let error as NSError {

print(error)

}

Now we split the data into lines at the newline character «\n».

lines = test_data_list.split() { $0 == "\n" }.map { $0 }

The scorecard records how well the network performs. Initially it is empty.

var scorecard = [Int]()

We go through all records in the test data set.

for line in 0..<lines.count {

Every 100 lines we print the line number.

if (line % 100 == 0) {

print(line)

}

Split the record at the commas («,»):

var all_values = lines[line].split() { $0 == "," }.map { Int($0)! }

Correct answer is the first value

var correct_label = Int(all_values[0])

Scale and shift the inputs:

for i in 0..<input_nodes {

inputs[0,i] = (Double(all_values[i+1]) / 255.0 * 0.99) + 0.01

}

Query the network

var outputs = Matrix(rows: output_nodes, columns: 1)

outputs = n.query(inputs)

The index of the highest value corresponds to the label

let label = outputs.maximum()

Append correct or incorrect to list

if (label == correct_label) {

Network’s answer matches correct answer, add 1 to scorecard

scorecard.append(1)

Network’s answer doesn’t match correct answer, add 0 to scorecard

} else {

scorecard.append(0)

}

Here again the whole test loop:

for line in 0..<lines.count {

if (line % 100 == 0) {

print(line)

}

var all_values = lines[line].split() { $0 == "," }.map { Int($0)! }

var correct_label = Int(all_values[0])

//print("\(correct_label) correct_label")

for i in 0..<input_nodes {

inputs[0,i] = (Double(all_values[i+1]) / 255.0 * 0.99) + 0.01

}

var outputs = Matrix(rows: output_nodes, columns: 1)

outputs = n.query(inputs)

//print(outputs)

let label = outputs.maximum()

//print("\(label) network's answer")

if (label == correct_label) {

scorecard.append(1)

} else {

scorecard.append(0)

}

}

Calculate the performance score, the fraction of correct answers.

let sum = scorecard.reduce(0, +)

let performance = Double(sum) / Double(scorecard.count)

print("performance = \(performance)" )

I’m getting the following output:

performance = 0.963

Program ended with exit code: 0

So this is it! Have fun with this program code.
